AI-Powered Self-Assessment Tool

Time frame:

10 months part-time

My role:

Product Designer

Team members:

Product Manager: Nancy Gao

Lead engineer: Jesse Merhi

Engineer: Hamish Cox

Platform:

APEX Wrapped, desktop and mobile

Tools:

Figma, FigJam, Confluence, Jira, Atlassian AI & ChatGPT (model training)

Project Overview

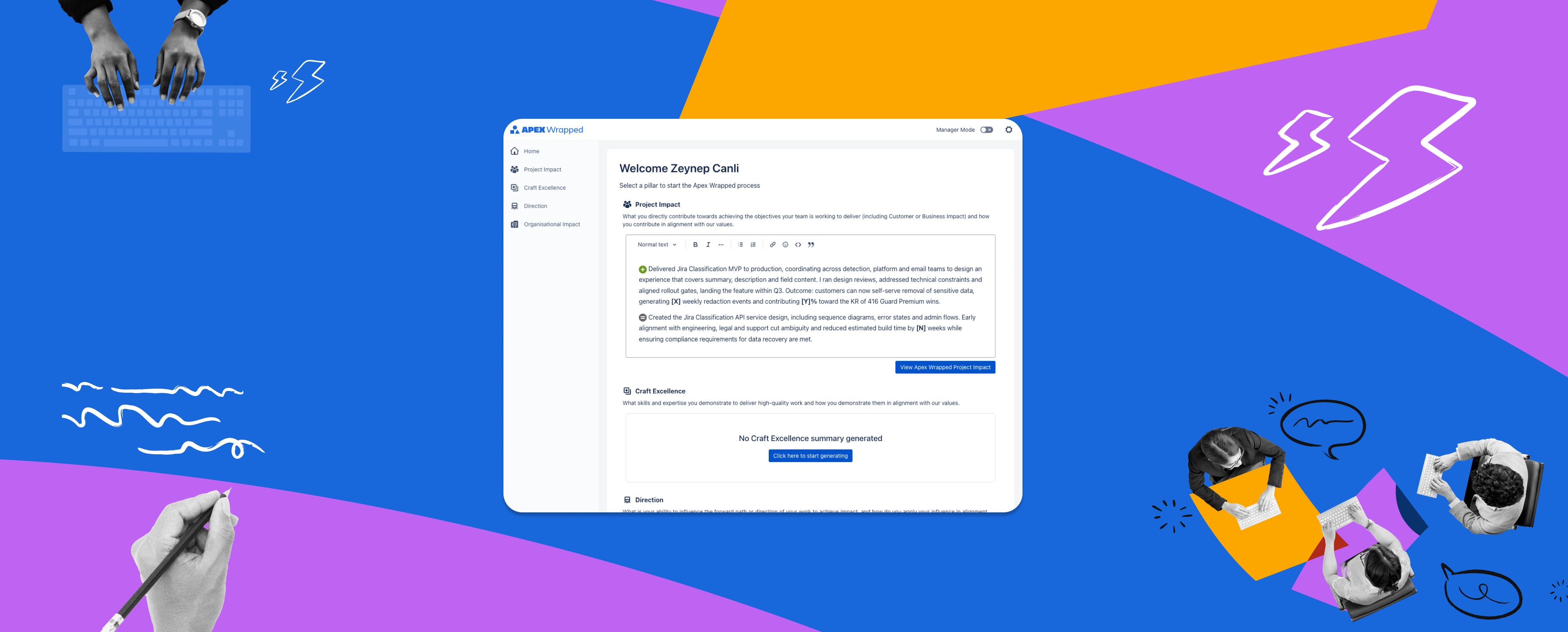

APEX Wrapped is an internal AI-powered tool I designed to help Atlassians write their performance self-assessments faster and more effectively. Every six months, thousands of employees spend hours recalling and compiling work. APEX Wrapped streamlines this by pulling relevant content, sorting it by performance pillars (e.g. Craft Excellence, Org Impact), and generating a draft summary.

As the sole designer, I led the end-to-end design over 10 months (part-time), collaborating closely with engineers, conducting user testing, and supporting internal rollout. The tool served over 1,800 users and was widely promoted internally.

The Problem

Self-assessments at Atlassian were time-consuming and stressful. Employees often spent up to 10 hours per cycle collecting links and notes — time not spent coding, shipping, or supporting customers. Many struggled to recall six months' worth of work, let alone articulate it succinctly.

In one cycle, only ~35% of engineers had completed their self-assessments by the deadline, prompting last-minute Slack reminders. In the week reviews were due, productivity across the company dropped: meetings were postponed, projects stalled, and many people worked nights or weekends. The process led to burnout, procrastination, and lost productivity.

[Feedback from Atlassians hidden for confidentiality]

The Opportunity

We saw a chance to improve the process by combining AI and Atlassian's internal integrations. With access to Jira, Confluence, Atlas, and Kudos data, we could automate much of the legwork.

This aligned with a company-wide OKR focused on improving review efficiency. Saving even 5 hours per engineer per cycle could reclaim tens of thousands of hours annually.

Design Process

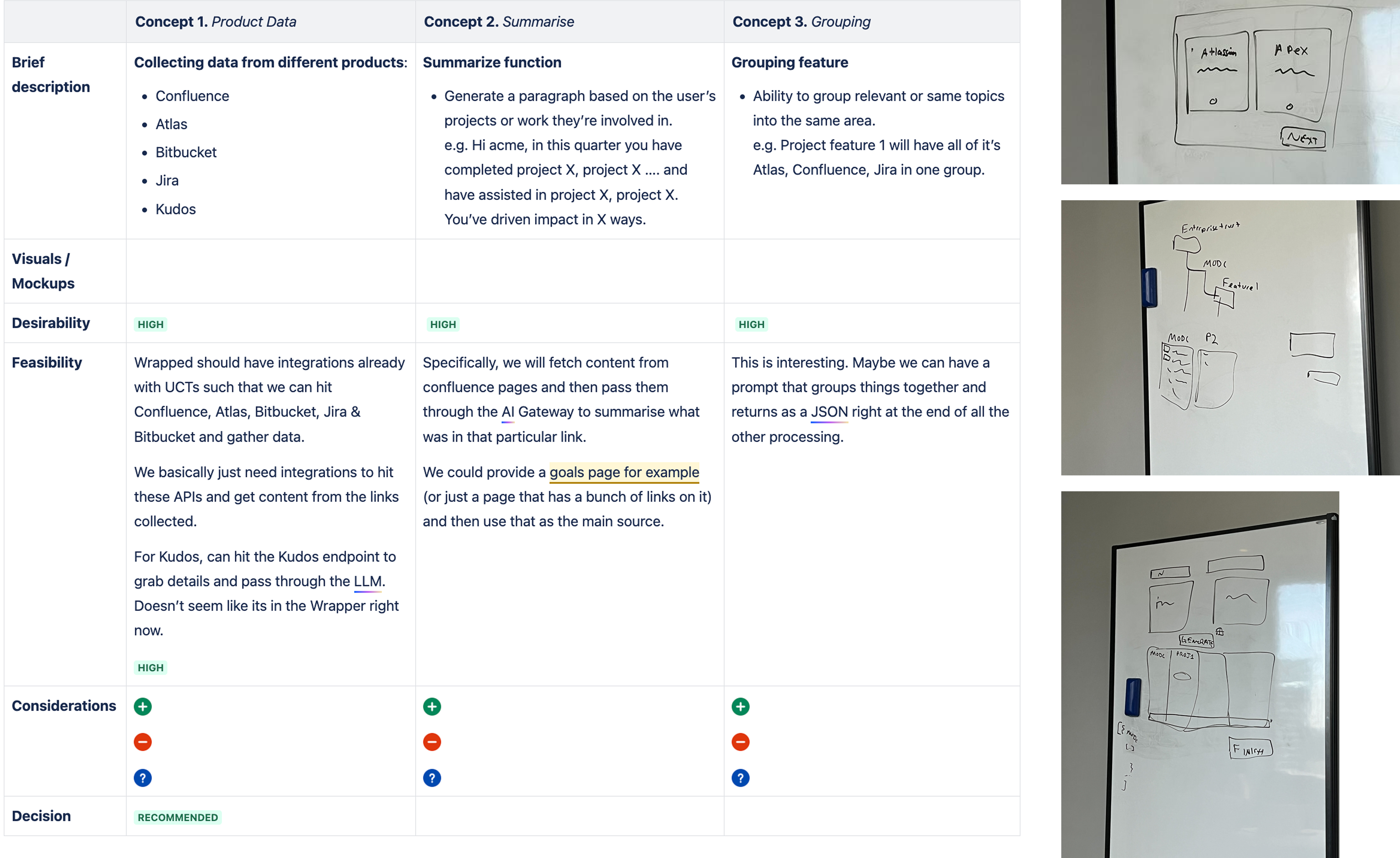

Early Ideation (ShipIt Hackathon)

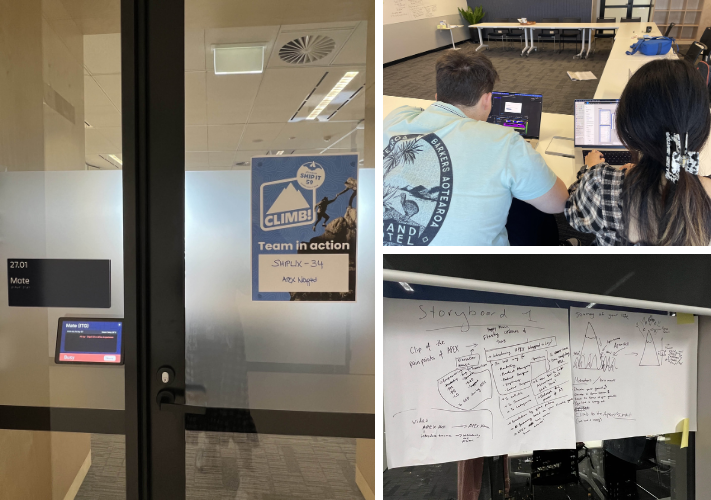

Our team (one PM, five engineers, and me as the designer) kicked off with a 24-hour ShipIt hackathon. Inspired by Spotify's year-in-review, we named the project "APEX Wrapped."

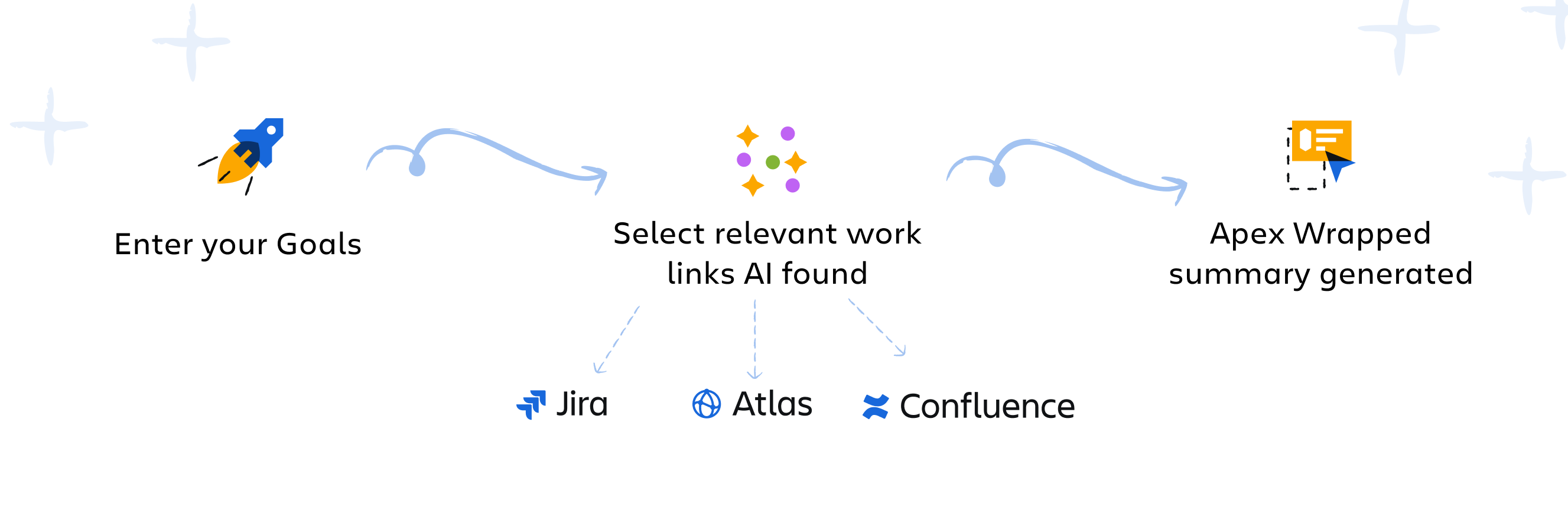

We scoped the experience as a three-step flow:

- Connect your data

- Pick what's important

- Get a summary

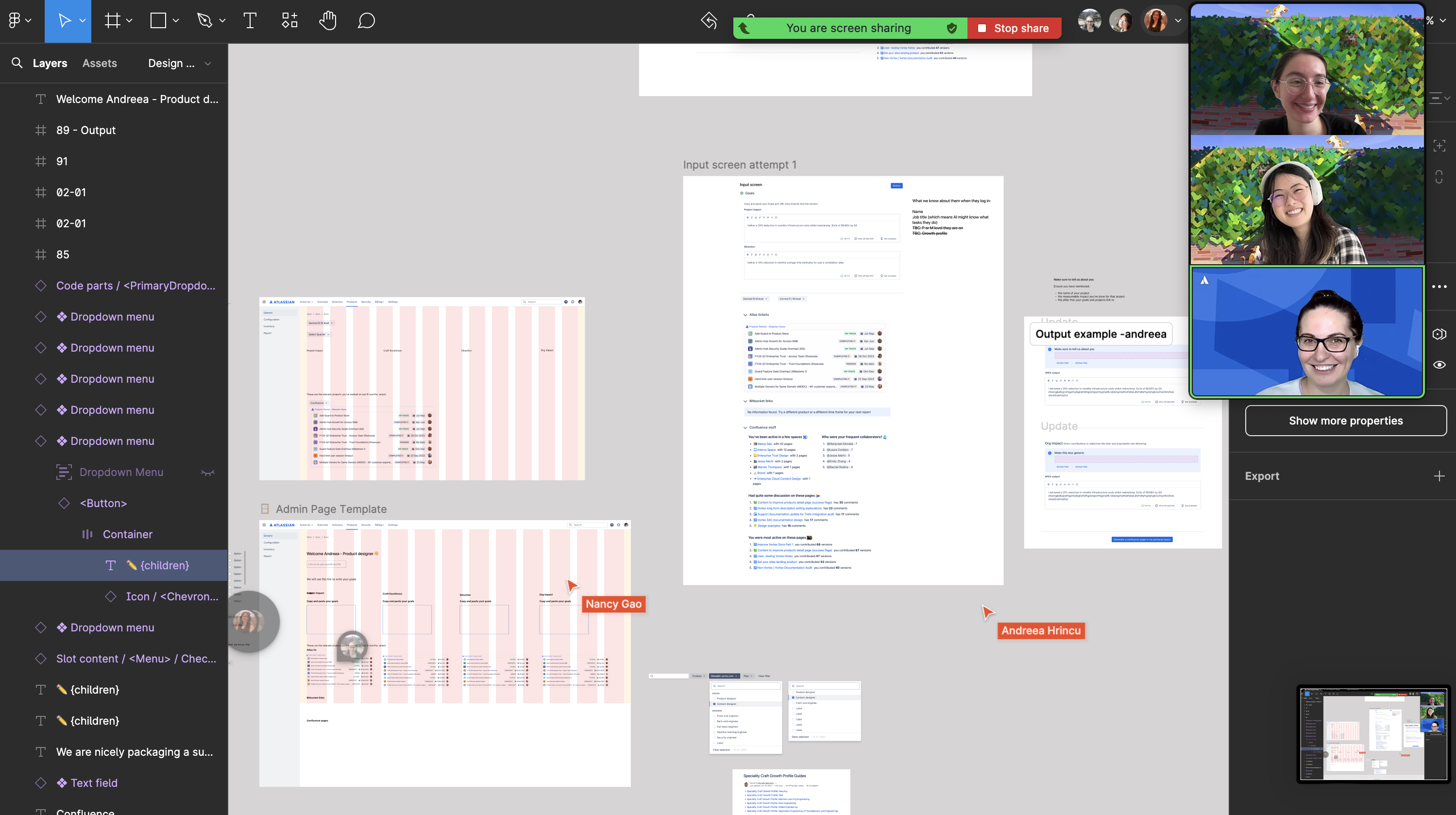

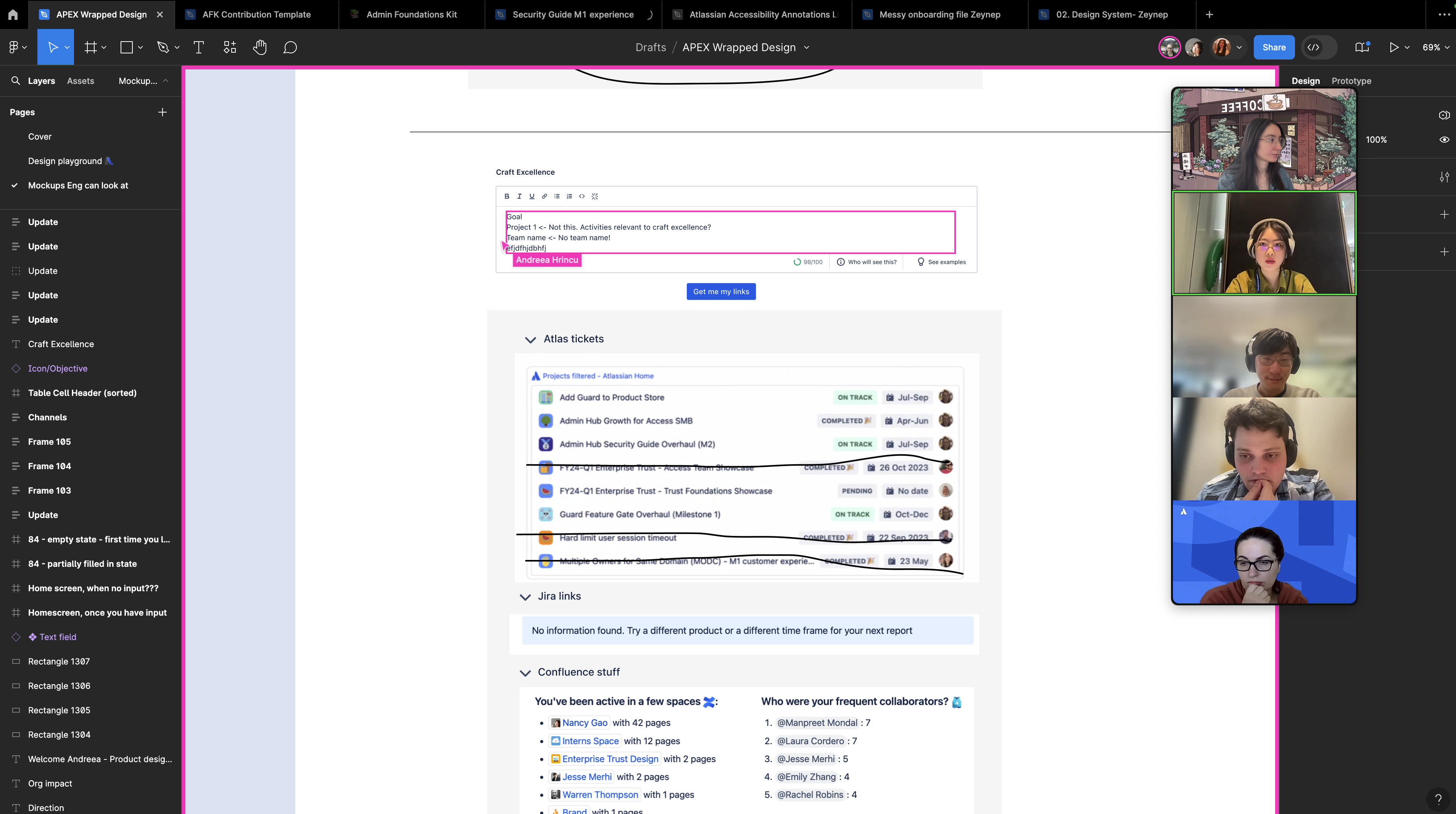

I produced low-fidelity wireframes for an intro page, input form, results list, and summary view.

Key design choices were set here: letting users choose which items to include (to maintain trust) and grouping links by relevance to avoid overwhelming them. Working side by side with engineers, we built a clickable prototype that fetched Jira and Confluence items and generated a rough draft summary.

The prototype was raw, but it was enough to win "Most Useful Hack" at ShipIt — and caught the attention of R&D leaders who encouraged us to keep going.

Further Iteration & Prototyping

After ShipIt, we continued development in spare cycles. I took a lean UX approach: rapid iterations, weekly design/dev syncs, and constant feedback.

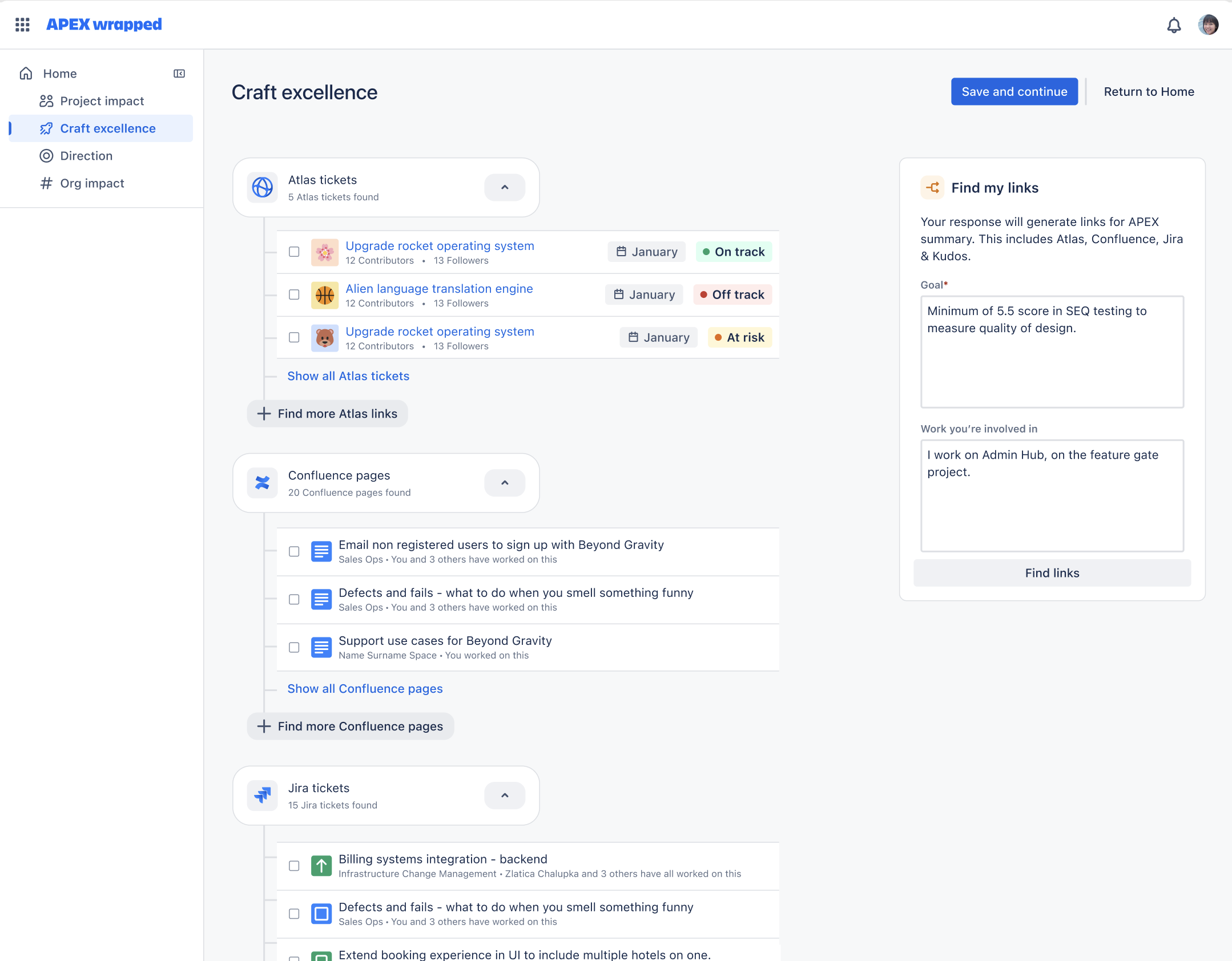

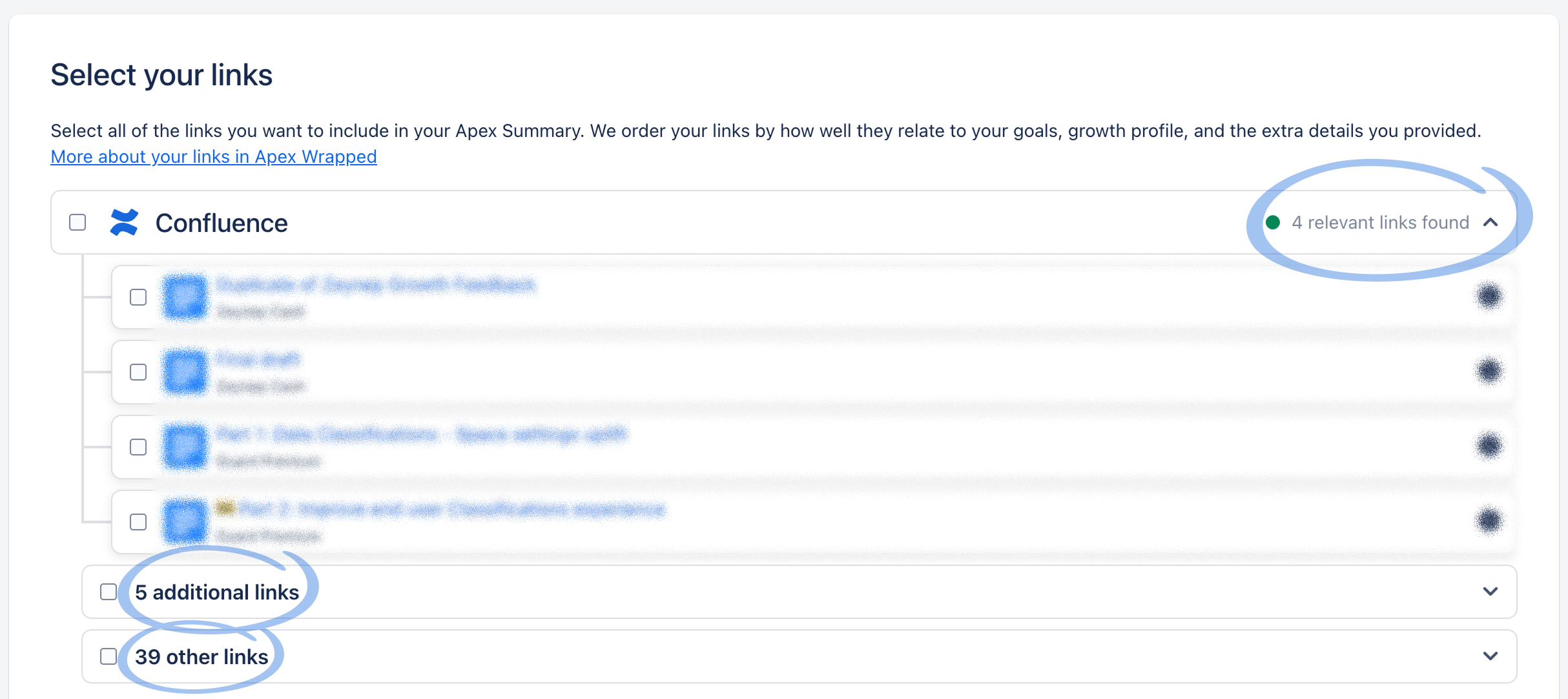

A key challenge was data volume. Active users could generate dozens of items. To keep things manageable, we introduced three-tier categorization: Relevant Links, Additional Links, and Other Links. Accordion-style lists let users focus first on high-value items. Tooltips explained why each item appeared (e.g. "Matched your goal keyword"), building transparency and confidence in the AI.

Blitz Usability Testing

Formal research wasn't feasible, so I ran a "blitz" usability week with six 30-minute sessions. Employees from engineering and product roles walked through the prototype and thought aloud. I iterated rapidly, sometimes updating the prototype the same day. This fast loop caught usability issues early and kept the product intuitive.

Findings led to several impactful changes:

Manager Mode:

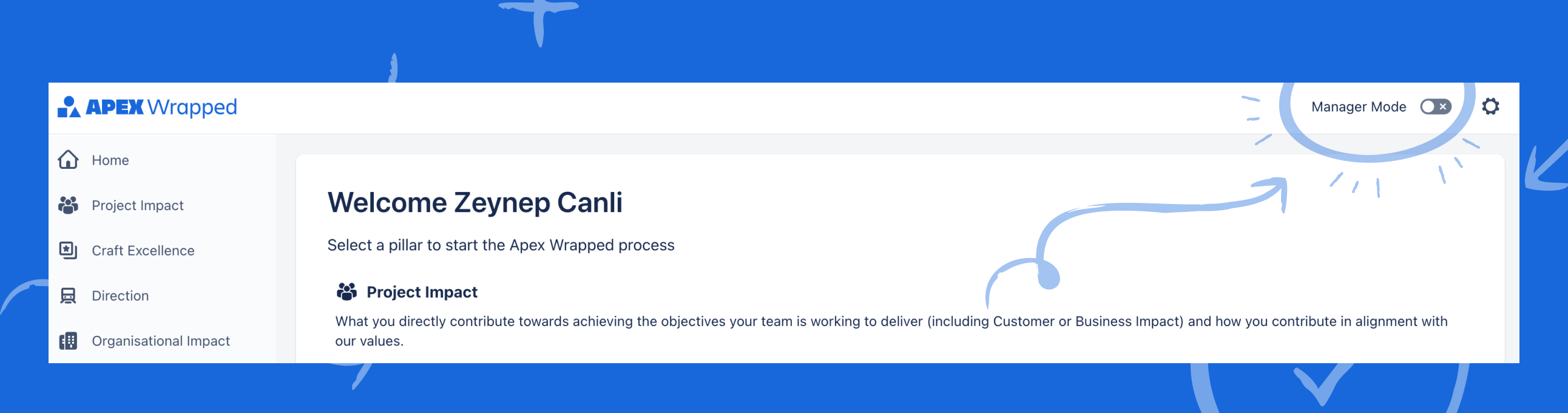

Added a toggle after managers asked whether the tool considered leadership responsibilities. Enabling it swapped in manager-specific pillars (e.g. People Leadership).

Onboarding clarity:

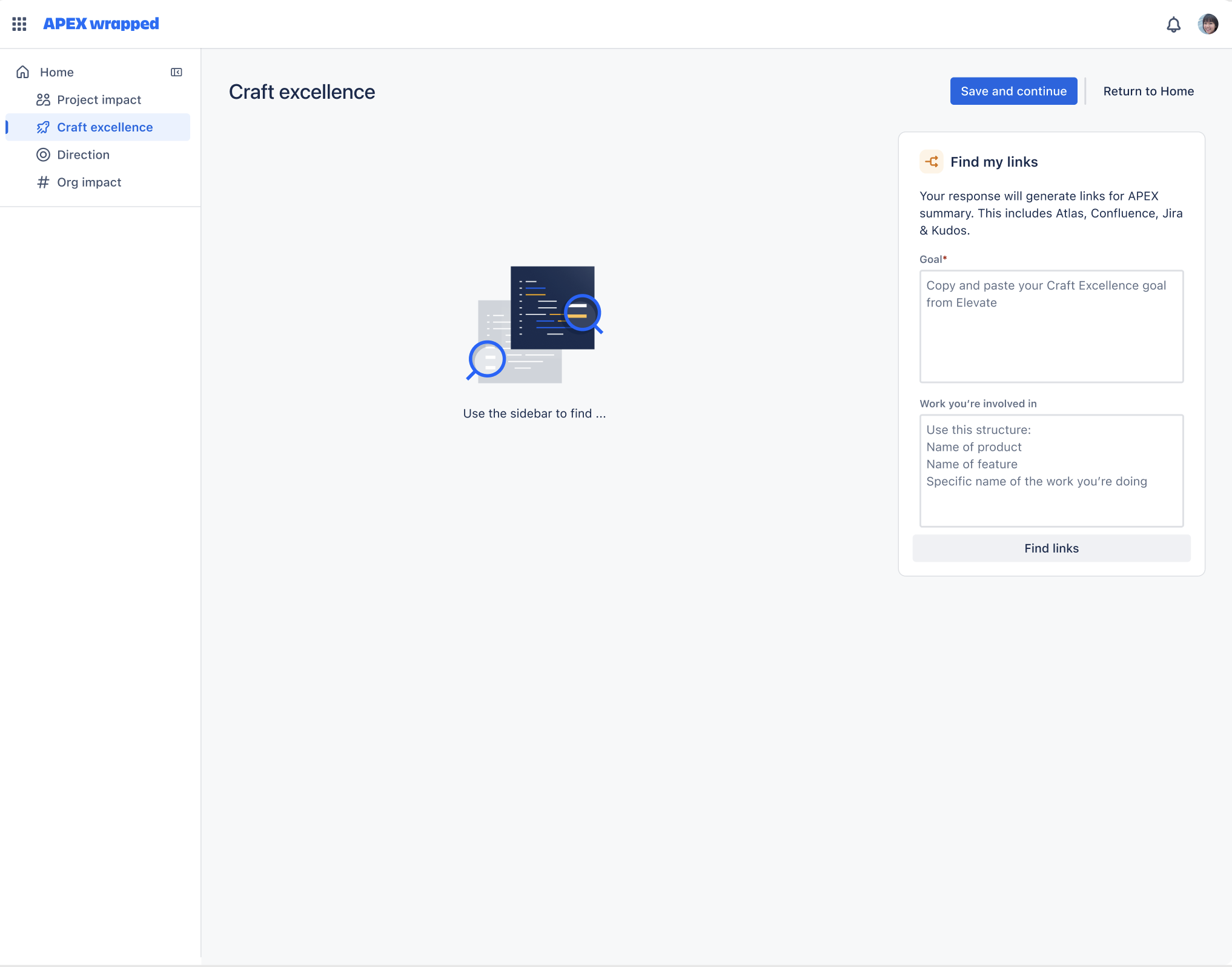

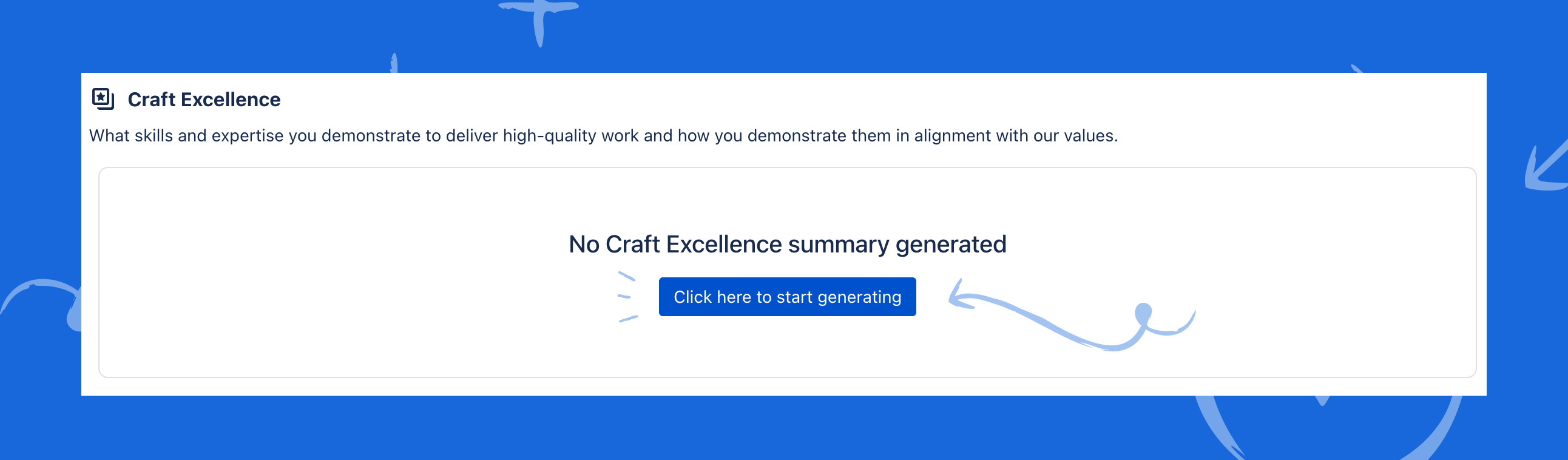

Users landing on an empty pillar page were confused, so we added clearer copy and a home-screen nudge: "Click a pillar to begin generating your summary."

Expectation setting:

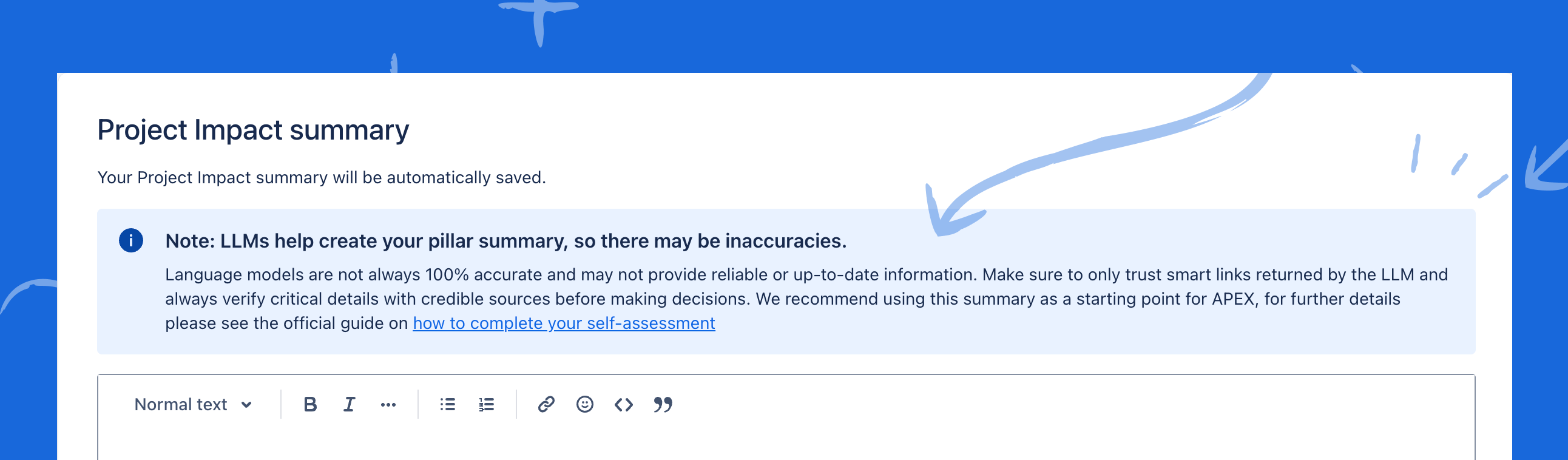

Testers loved the AI text but worried about accuracy. We added reminders that the summary was a draft — a starting point to edit and personalize.

Final Solution

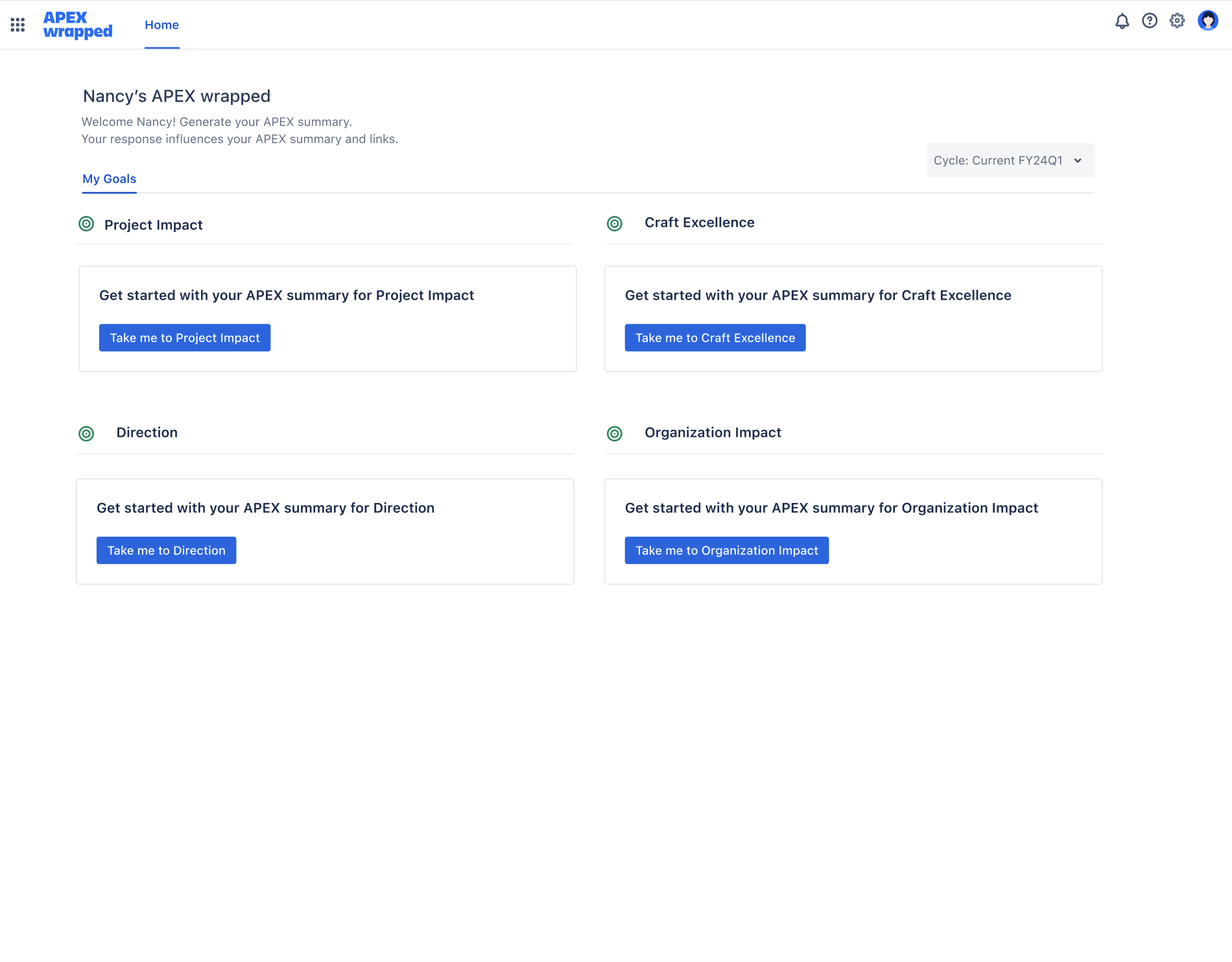

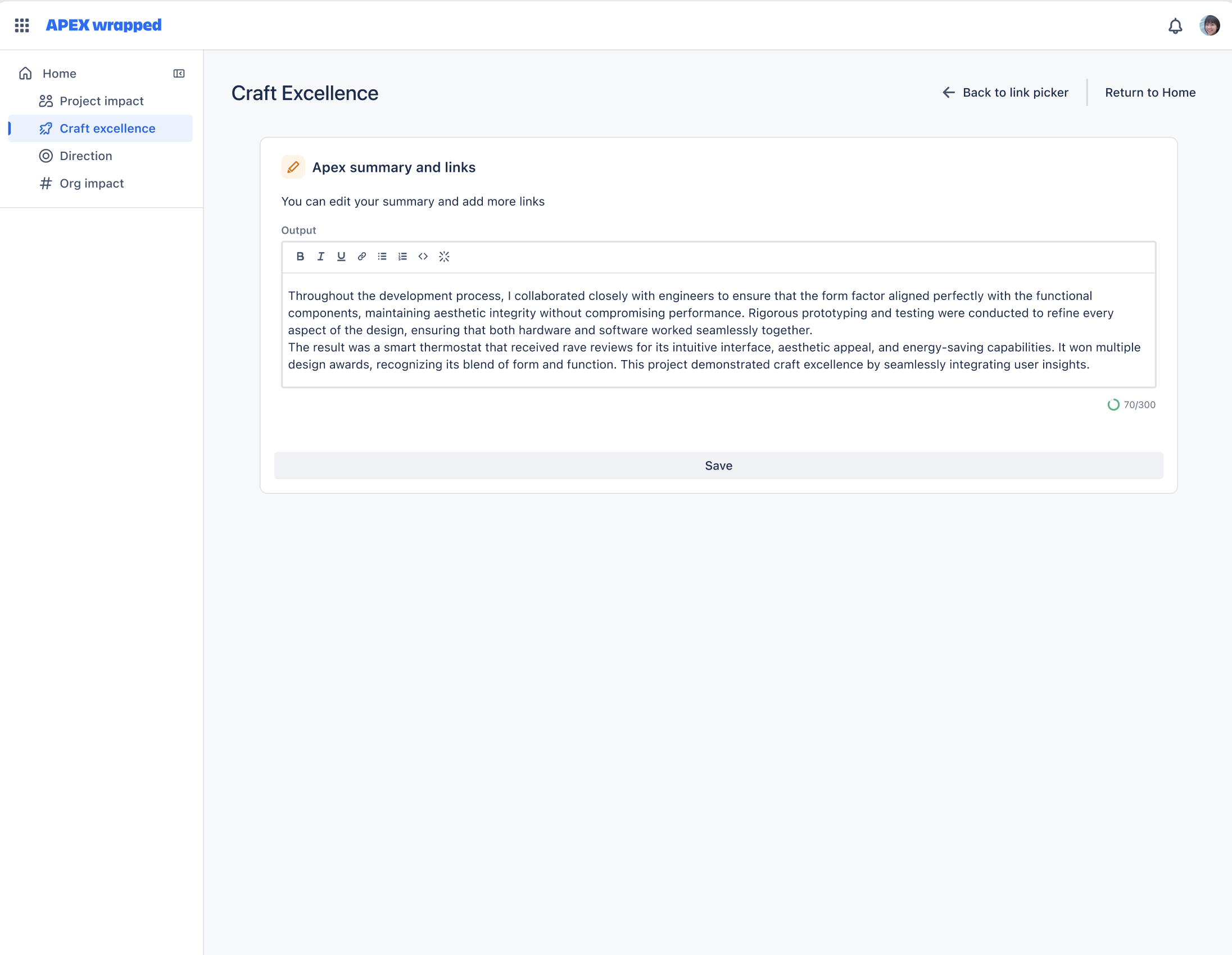

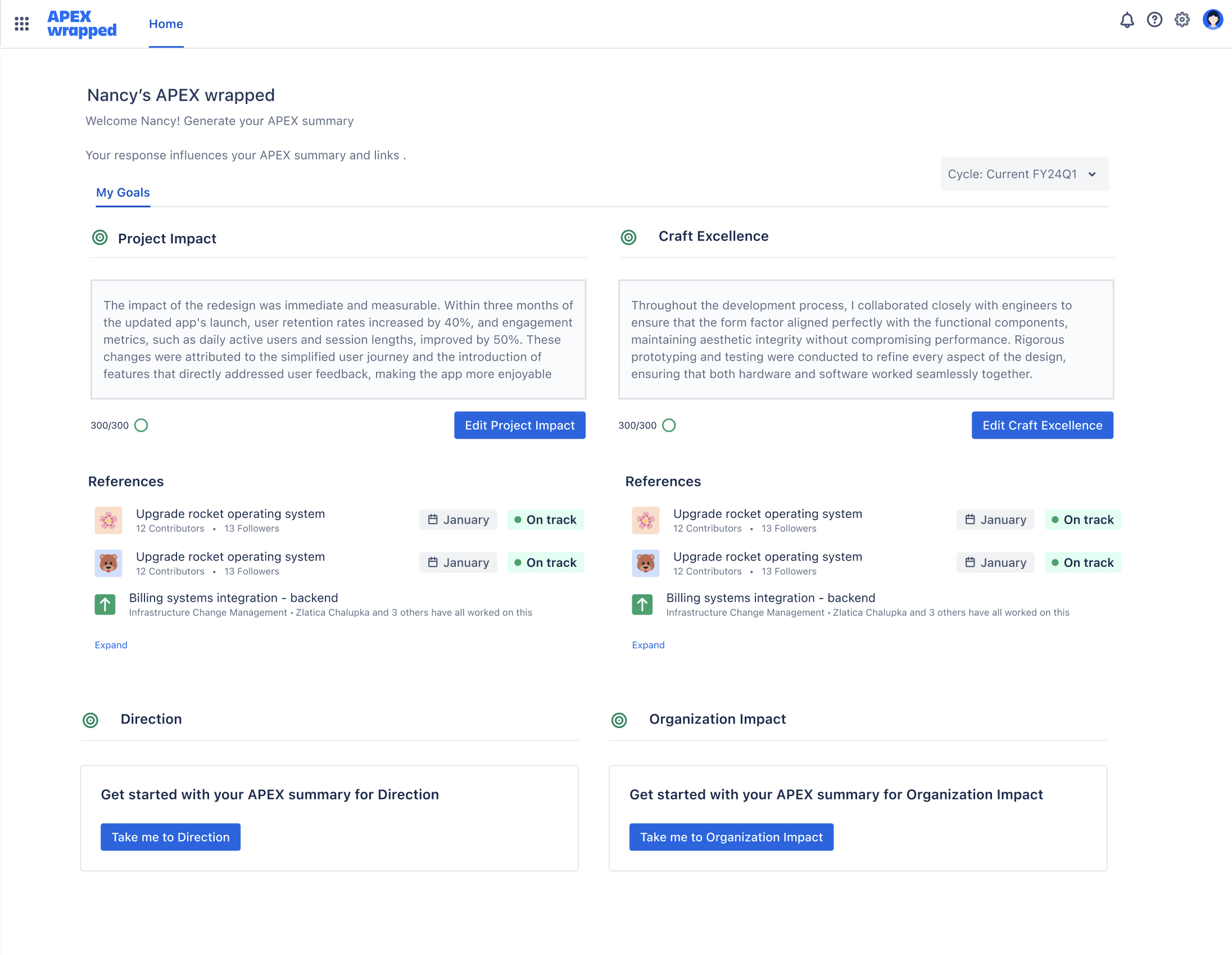

APEX Wrapped evolved into a three-step web app:

- Find your work – Fetches Jira issues, Confluence pages, Atlas updates, and Kudos. Results appear in Relevant, Additional, and Other categories.

- Select & curate – Users pick which items represent their achievements, with tooltips explaining why items were surfaced.

- Generate summary – AI compiles selections into a coherent ~300-word narrative, highlighting outcomes and metrics where possible.

The home page displayed each pillar with completion status, creating a checklist feel. The branding was slightly playful ("Wrapped") to reduce dread, while visuals stayed aligned with Atlassian's design system.

In the end, a multi-hour ordeal became a guided 10-minute process. Users stayed in control, ensuring outputs felt authentic rather than blindly AI-generated.

Rollout & Impact

To launch company-wide, we worked with Design Ops and internal comms. We published a Confluence guide, recorded a Loom demo, and opened a #help-apex-wrapped Slack channel.

Feedback from users included:

- "This is a lifesaver – I finished my self-assessment in 30 minutes instead of 3 hours!"

- "Can we keep this forever?"

- One developer joked it was "the only reason I didn't spend my entire weekend on my review."

Within the first cycle, 1,800 employees used APEX Wrapped. The People Team noted earlier completion rates and more detailed, fact-based self-assessments. The time reclaimed adds up: even if each user saved just 5 hours, that's ~9,000 hours returned to the business.

We had some hiccups with permissions (e.g. restricted Confluence pages not appearing), but quick support and patches resolved most issues.

Reflections

Working on APEX Wrapped reinforced how a scrappy idea can evolve into a meaningful product. Seeing colleagues embrace something I designed — and hearing it relieved their stress — was deeply rewarding.

If I could continue the project, I would:

- Involve end-users earlier: Blitz testing was useful, but bringing in a broader mix at ideation (e.g. managers, sales teams) could have surfaced needs like Manager Mode sooner.

- Integrate more deeply with Elevate: APEX Wrapped lived on a separate site. Ideally, it would be embedded directly into Atlassian's performance system, with no copy-paste required.

- Expand data sources: We focused on Jira, Confluence, Atlas, and Kudos. But work also happens in Figma, Slack, and Bitbucket. Adding those (with privacy safeguards) would give an even fuller picture. Several users specifically asked, "Can it pull from Bitbucket or Slack?"

- Add celebratory insights: With more time, I'd build out the "Wrapped" metaphor — visual infographics like "You completed 28 Jira issues across 5 projects" — to make the process not just efficient but genuinely fun.

Overall, APEX Wrapped was a success. What started as a hackathon idea became an officially endorsed part of the APEX process. Instead of accepting the status quo ("performance reviews are awful"), our team challenged it and delivered a solution with measurable impact.